ANVI: Accessible Navigation for the Visually Impaired

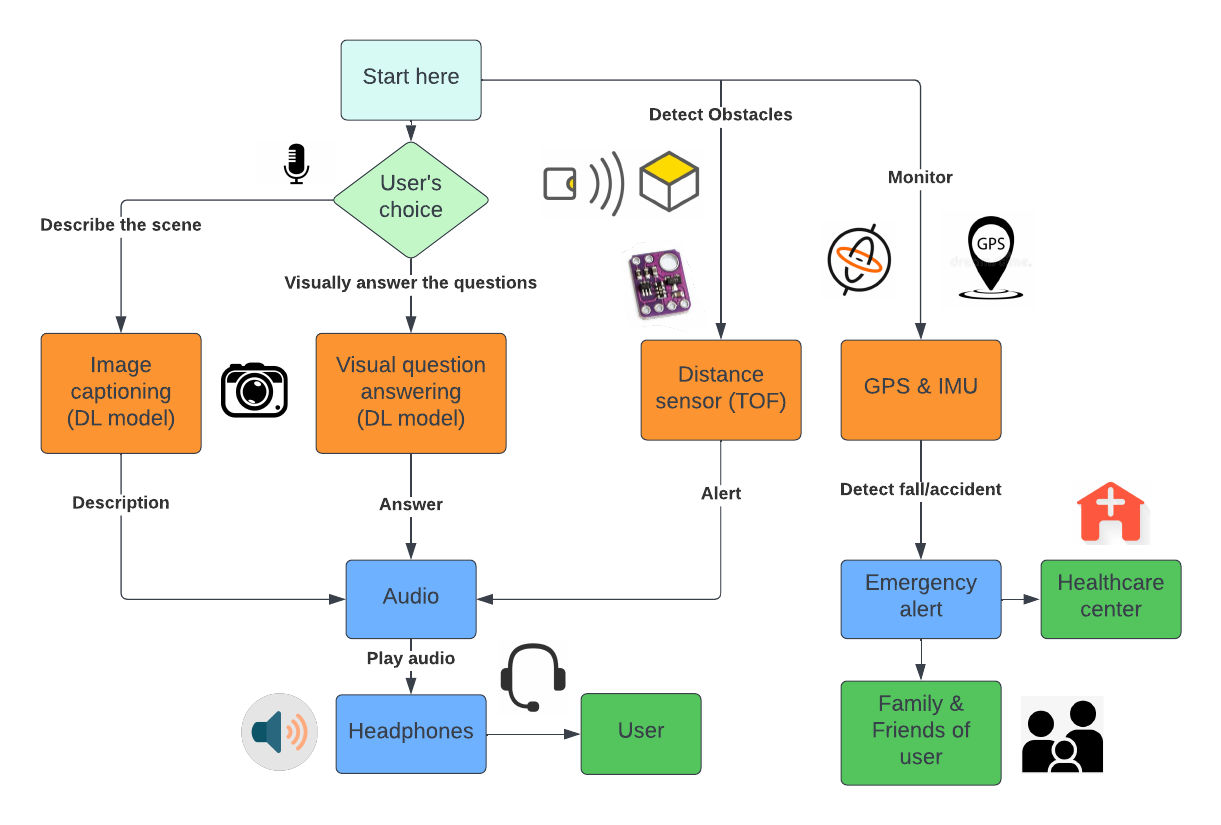

ANVI is an affordable wearable that blends deep learning perception, LIDAR sensing, and safety telemetry to deliver real-time audio navigation assistance for users with visual impairments.

Objective

Led the design of an assistive wearable that gives contextual audio instructions based on the user's surroundings. The goal was to pair affordable hardware with pretrained vision-language models (CLIP and LXMERT) to improve mobility and safety for visually impaired users.

Technology Stack

- Deployed CLIP and LXMERT for image captioning and visual question answering.

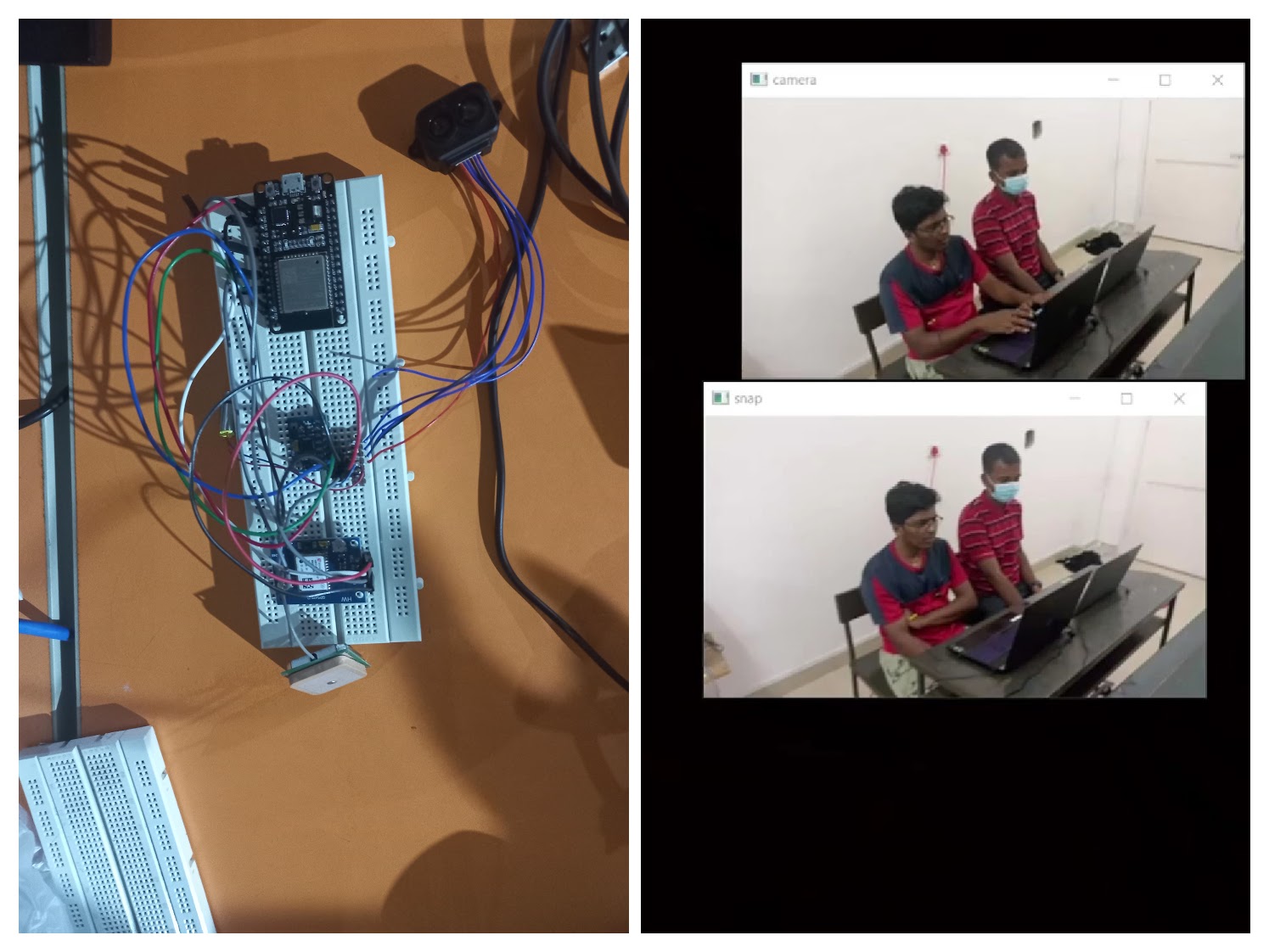

- Targeted embedded compute on Raspberry Pi and Jetson Nano.

- Integrated time-of-flight LIDAR, MPU6050 IMU, and Li-Po power management.

- Implemented fall detection, vibration haptics, and GPS telemetry for safety.
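As an illustration of how fall detection on an accelerometer such as the MPU6050 might work, here is a minimal sketch of one common heuristic: a brief free-fall phase (acceleration magnitude well below 1 g) followed shortly by an impact spike. The class name `FallDetector`, the thresholds, and the time window are assumptions chosen for illustration, not values from the ANVI build, and the samples are passed in directly rather than read from the sensor.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class FallDetector:
    """Two-phase threshold heuristic (hypothetical parameters)."""
    free_fall_g: float = 0.4   # assumed: below this magnitude counts as free fall
    impact_g: float = 2.5      # assumed: above this magnitude counts as an impact
    window_s: float = 1.0      # assumed: max delay between free fall and impact
    _free_fall_at: Optional[float] = None

    def update(self, t: float, ax: float, ay: float, az: float) -> bool:
        """Feed one accelerometer sample (in g); return True when a fall is detected."""
        mag = math.sqrt(ax * ax + ay * ay + az * az)
        if mag < self.free_fall_g:
            # Remember the most recent free-fall instant.
            self._free_fall_at = t
            return False
        if self._free_fall_at is not None:
            if t - self._free_fall_at > self.window_s:
                # Too long since free fall: discard the candidate.
                self._free_fall_at = None
            elif mag > self.impact_g:
                # Impact closely following free fall: report a fall.
                self._free_fall_at = None
                return True
        return False
```

In a real device, a `True` result would be the point at which to raise the emergency alert and attach the current GPS fix; a production detector would likely also check orientation and post-impact stillness to reduce false alarms.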

Key Features

- The wearable headset streams camera imagery to an edge device (Raspberry Pi or Jetson Nano), which generates captions and intent-aware audio prompts.

- Obstacle detection pairs time-of-flight LIDAR ranging with vibration motors to give directional haptic feedback.

- Fall detection triggers emergency alerts and location sharing via onboard GPS.

- Supports wireless camera placement and multi-platform compute for rapid prototyping.

- Designed around affordability to ensure broad accessibility and community adoption.
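The obstacle-feedback feature can be sketched as a mapping from range readings to vibration strength. The example below assumes one range reading per direction and a linear ramp between a hypothetical "near" and "far" distance; the function names, the direction labels, and the 0.3 m and 2.0 m limits are illustrative assumptions rather than details of the ANVI hardware.

```python
from typing import Dict, Optional


def haptic_intensity(distance_m: Optional[float],
                     near_m: float = 0.3,
                     far_m: float = 2.0) -> float:
    """Map a range reading to a motor duty cycle in [0.0, 1.0].

    None means the sensor saw no return (nothing in range).
    near_m and far_m are assumed limits, not measured values.
    """
    if distance_m is None or distance_m >= far_m:
        return 0.0          # nothing close enough to warn about
    if distance_m <= near_m:
        return 1.0          # very close: full vibration
    # Linear ramp: closer obstacles vibrate harder.
    return (far_m - distance_m) / (far_m - near_m)


def directional_feedback(ranges: Dict[str, Optional[float]]) -> Dict[str, float]:
    """Compute one duty cycle per direction, e.g. for left/center/right motors."""
    return {direction: round(haptic_intensity(r), 2)
            for direction, r in ranges.items()}
```

Calling `directional_feedback({"left": 0.2, "center": None, "right": 3.0})` yields full vibration on the left motor and none on the others. On the device, each duty cycle would drive the PWM output of the matching vibration motor.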

Impact

The ANVI platform demonstrates that low-cost sensing and modern multimodal models can coexist in a wearable form factor, delivering proactive guidance while maintaining critical safety monitoring for visually impaired users.