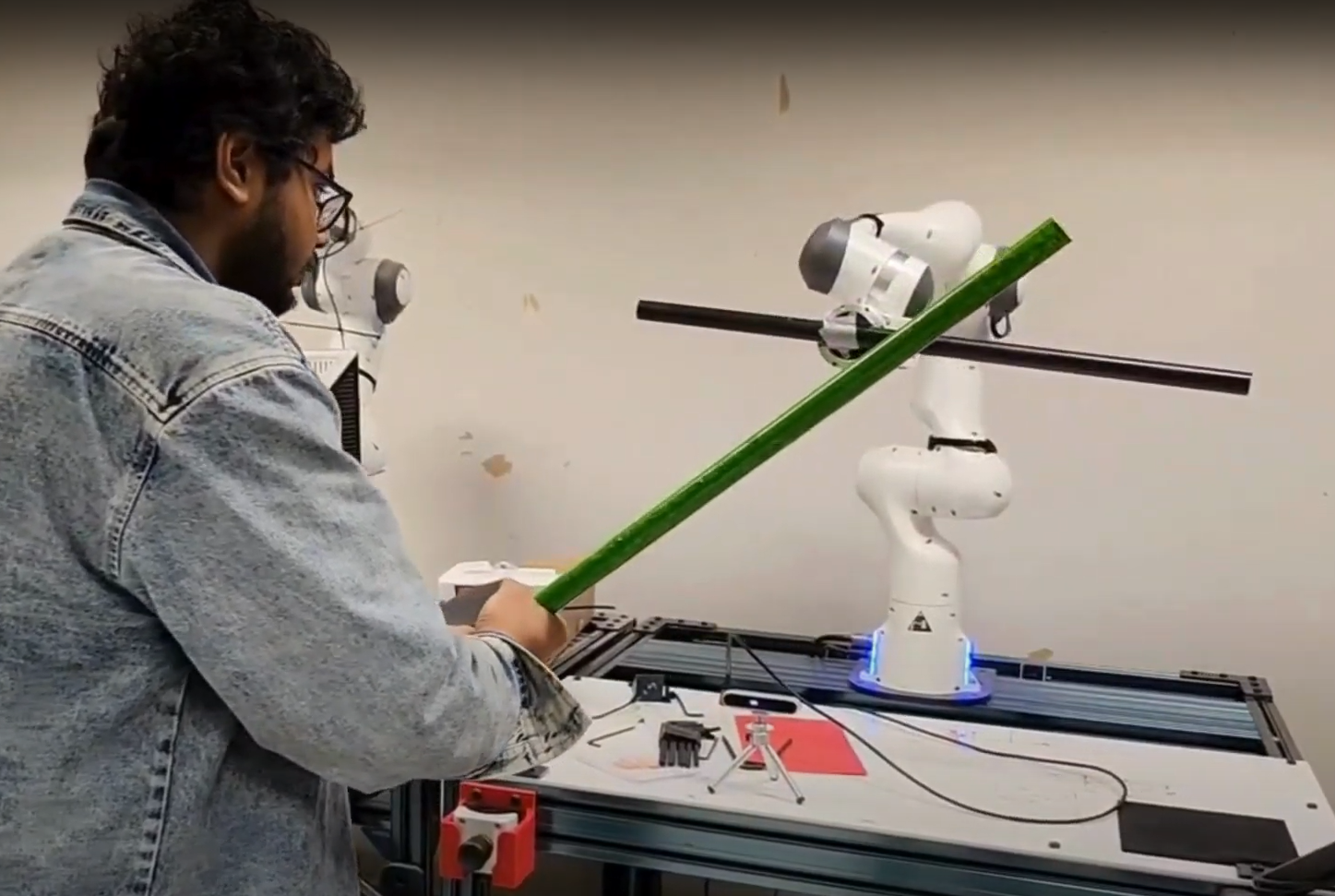

DUM-E: Vision-Guided Robotic Fencing System for Human-Robot Interaction

Franka Emika Panda

RGB-D Perception

HSV Segmentation

ROS

Finite State Machine

Human-Robot Interaction

Research Objective

The DUM-E system (Dynamic Utility Manipulator – Experimental) aims to enable interactive fencing between a human and a robot by combining real-time vision tracking with reactive motion generation. Using an external Intel RealSense RGB-D camera and a Franka Emika Panda arm, the system detects the human opponent's baton through color-based segmentation and generates counter-trajectories using a finite state machine and a mirroring strategy. The objective is to demonstrate fast, safe, and responsive human-robot interaction under dynamic motion, bridging perception and control in an adversarial task.

Key Features Achieved

- Implemented real-time 3D pose estimation of a human-held baton using HSV color segmentation and depth fusion from an Intel RealSense D435i camera.

- Developed an extrinsic calibration pipeline that transforms detected poses from the camera frame to the robot base frame with sub-centimeter accuracy.

- Designed a finite state machine (FSM) for passive–active state transitions that triggers defensive actions when the baton breaches a virtual defense plane.

- Built a reactive trajectory planner that mirrors the opponent’s baton motion across the camera plane, achieving smooth and natural defensive movements.

- Integrated quaternion-based orientation control via FrankaPy to keep the end-effector stable and hold a consistent blocking posture.

- Achieved a minimum viable product capable of low-latency visual tracking, smooth imitation, and responsive defense in human–robot fencing scenarios.
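The perception step (HSV segmentation fused with depth) can be sketched as follows. This is a minimal, dependency-free illustration, not the project's code: the HSV window is a hypothetical placeholder for the baton color, the depth image is assumed to be aligned to the color image and expressed in meters, and the intrinsics `fx, fy, cx, cy` are assumed inputs. The centroid of the masked pixels is combined with the median valid depth and deprojected through a pinhole model.

```python
import colorsys
import statistics
from typing import List, Optional, Sequence, Tuple

RGB = Tuple[int, int, int]

# Hypothetical HSV window on colorsys's [0, 1] scale; tune for the real baton color.
H_RANGE = (0.25, 0.45)  # roughly green hues (assumption)
S_MIN = 0.4
V_MIN = 0.2


def segment_baton(rgb: Sequence[Sequence[RGB]]) -> List[Tuple[int, int]]:
    """Return (u, v) pixel coordinates whose HSV value lies inside the window."""
    pixels = []
    for v_idx, row in enumerate(rgb):
        for u_idx, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            if H_RANGE[0] <= h <= H_RANGE[1] and s >= S_MIN and v >= V_MIN:
                pixels.append((u_idx, v_idx))
    return pixels


def baton_point_camera(
    rgb: Sequence[Sequence[RGB]],
    depth_m: Sequence[Sequence[float]],
    fx: float, fy: float, cx: float, cy: float,
) -> Optional[Tuple[float, float, float]]:
    """Fuse the mask centroid with the median valid depth, then deproject (pinhole model)."""
    pixels = segment_baton(rgb)
    depths = [depth_m[v][u] for u, v in pixels if depth_m[v][u] > 0.0]  # 0 means no reading
    if not depths:
        return None
    u = sum(p[0] for p in pixels) / len(pixels)
    v = sum(p[1] for p in pixels) / len(pixels)
    z = statistics.median(depths)  # median resists depth holes at the baton's edges
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)
```

With a real D435i, the intrinsics come from the stream profile, and pyrealsense2's `rs2_deproject_pixel_to_point` performs the same deprojection while also handling the camera's distortion model.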
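Once the extrinsics are known, moving a detected point from the camera frame to the robot base frame is a rigid transform, p_base = R · p_cam + t. The sketch below assumes the calibration result is stored as a unit quaternion `(w, x, y, z)` plus a translation; the calibration procedure itself is not described here, and any numeric extrinsics used with this code are placeholders.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def quat_to_matrix(q: Sequence[float]) -> List[List[float]]:
    """Rotation matrix from a quaternion (w, x, y, z); the quaternion is normalized first."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def camera_to_base(p_cam: Vec3, q_base_cam: Sequence[float], t_base_cam: Vec3) -> Vec3:
    """Apply the calibrated extrinsics: p_base = R_base_cam @ p_cam + t_base_cam."""
    R = quat_to_matrix(q_base_cam)
    return tuple(
        sum(R[i][j] * p_cam[j] for j in range(3)) + t_base_cam[i] for i in range(3)
    )
```

Storing the rotation as a quaternion keeps the calibration compact and matches the quaternion convention used for end-effector orientation.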
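The passive/active logic can be expressed as a two-state machine driven by the baton's signed distance to the defense plane. This is a sketch under stated assumptions: the plane is given by a point and a unit normal in the base frame, the normal points from the robot toward the opponent, and the `hysteresis` margin is a hypothetical addition meant to stop centroid jitter near the plane from toggling the state.

```python
from enum import Enum, auto
from typing import Tuple

Vec3 = Tuple[float, float, float]


class State(Enum):
    PASSIVE = auto()  # track or mirror the baton, no blocking motion
    ACTIVE = auto()   # baton has crossed the defense plane: execute a block


class DefenseFSM:
    def __init__(self, plane_point: Vec3, plane_normal: Vec3, hysteresis: float = 0.02):
        # Assumption: unit normal points from the robot toward the opponent, so a
        # negative signed distance means the baton is on the robot's side of the plane.
        self.p0 = plane_point
        self.n = plane_normal
        self.hysteresis = hysteresis  # meters; hypothetical value
        self.state = State.PASSIVE

    def signed_distance(self, p: Vec3) -> float:
        return sum((p[i] - self.p0[i]) * self.n[i] for i in range(3))

    def update(self, baton: Vec3) -> State:
        d = self.signed_distance(baton)
        if self.state is State.PASSIVE and d < 0.0:
            self.state = State.ACTIVE
        elif self.state is State.ACTIVE and d > self.hysteresis:
            # Disarm only after the baton retreats past the margin.
            self.state = State.PASSIVE
        return self.state
```

Calling `update` once per perception frame keeps the FSM synchronous with the vision pipeline.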
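Mirroring a point across a plane is a reflection, p' = p − 2((p − p0) · n) n. The exact plane used in the project is not specified beyond "the camera plane", so `plane_point` and `unit_normal` below are hypothetical inputs in the robot base frame.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def mirror_across_plane(p: Vec3, plane_point: Vec3, unit_normal: Vec3) -> Vec3:
    """Reflect p across the plane through plane_point with the given unit normal."""
    d = sum((p[i] - plane_point[i]) * unit_normal[i] for i in range(3))  # signed distance
    return tuple(p[i] - 2.0 * d * unit_normal[i] for i in range(3))
```

Applied per frame, for example `[mirror_across_plane(p, p0, n) for p in baton_track]`, this turns the opponent's baton path into a symmetric target path. A reflection reverses handedness, so it applies to positions only; orientation has to be commanded separately.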
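FrankaPy's own pose-command interface is not shown here. As a hedged illustration of quaternion-based orientation handling, the sketch below blends the current orientation toward a fixed blocking orientation with spherical linear interpolation (slerp). The sign check matters because q and −q encode the same rotation; without it the end-effector can take the long way around. The blocking quaternion a caller would pass is hypothetical.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation between unit quaternions q0 and q1, t in [0, 1]."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # Flip to the same hemisphere so interpolation follows the shorter arc.
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical orientations: normalized linear interpolation is stable here.
        q = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        q = tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)
```

Repeatedly stepping toward one fixed target orientation is one simple way to keep the blocking posture consistent while the position tracks the mirrored baton.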

Challenges & Learnings

- Perception noise: HSV segmentation was sensitive to lighting changes and occlusion, causing centroid jitter of ±1.1 cm (x–y) and ±1.7 cm (z).

- Latency optimization: The 165 ms end-to-end response latency exposed a trade-off between perception refresh rate and motion-planning overhead.

- Safety vs. workspace limits: Virtual wall constraints ensured safe interaction but restricted arm reach during aggressive attacks.

- Hardware adaptation: Switched from a Kinect to a RealSense camera because of compatibility issues, which required rapid recalibration of the vision pipeline.

- Key learning: Maintaining real-time responsiveness in perception-driven systems requires balancing sensor stability, control frequency, and motion smoothness.
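Centroid jitter like the values above is commonly reduced with a low-pass filter. The source does not say whether DUM-E filtered its detections; this is a sketch of one option, an exponential moving average (EMA), with a hypothetical `alpha`. It also illustrates the latency trade-off: a smaller `alpha` suppresses more jitter but makes the filtered estimate lag the real baton.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class CentroidEMA:
    """Exponential moving average: y_k = alpha * x_k + (1 - alpha) * y_{k-1}."""

    def __init__(self, alpha: float = 0.4):  # hypothetical default
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state: Optional[Vec3] = None

    def update(self, x: Vec3) -> Vec3:
        if self.state is None:
            self.state = x  # initialize on the first measurement
        else:
            a = self.alpha
            self.state = tuple(a * xi + (1.0 - a) * yi for xi, yi in zip(x, self.state))
        return self.state
```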
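Virtual walls can be enforced by clamping every commanded end-effector position into an allowed region before it is sent to the arm. The axis-aligned box below is an assumption about the wall geometry, and its bounds are hypothetical values, not the project's limits.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical workspace box in the robot base frame, in meters.
WALL_MIN: Vec3 = (0.30, -0.35, 0.15)
WALL_MAX: Vec3 = (0.70, 0.35, 0.75)


def clamp_to_virtual_walls(
    target: Vec3, lo: Vec3 = WALL_MIN, hi: Vec3 = WALL_MAX
) -> Tuple[Vec3, bool]:
    """Clamp a commanded position into the box and report whether it was clipped."""
    clamped = tuple(min(max(t, l), h) for t, l, h in zip(target, lo, hi))
    return clamped, clamped != tuple(target)
```

The clipping flag makes the reach limitation visible: an aggressive attack that pulls the mirrored target outside the box produces a clipped command, which is exactly where the arm falls short.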
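Finding where latency goes requires per-stage timing. This hypothetical profiler times named pipeline stages with `time.perf_counter`; the stage names are illustrative. It only covers on-host processing: camera exposure and transport delay would have to be measured separately, for example by comparing each frame's capture timestamp with the time the trajectory is triggered.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


class LatencyProfiler:
    """Records wall-clock time (ms) for each named stage of one pipeline cycle."""

    def __init__(self) -> None:
        self.stages: Dict[str, float] = {}

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            self.stages[name] = (time.perf_counter() - start) * 1000.0

    def total_ms(self) -> float:
        return sum(self.stages.values())
```

Wrapping segmentation, transformation, FSM update, and planning in `with prof.stage(...)` blocks shows which stage dominates the total.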

Quantitative Results

- Pose estimation stability: centroid variation of ±1.1 cm (x–y) and ±1.7 cm (z) across 15 static trials.

- System response latency: 165 ms end-to-end (camera to trajectory trigger).

- Defensive success rate: ~80% of attacks intercepted across 10 engagement trials.

- Motion smoothness: Minimal overshoot with < 2 cm steady-state positional error during mirrored trajectories.

- User perception: Qualitative feedback indicated fluid and intuitive interaction, especially during continuous mirroring phases.
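The stability figures can be computed from repeated static measurements. The source does not define the ± statistic, so this sketch assumes it is one sample standard deviation per axis, reported in centimeters, and assumes the x–y figure is the larger of the x and y deviations. At least two samples are required.

```python
import statistics
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def centroid_spread_cm(samples_m: Sequence[Vec3]) -> Tuple[float, float]:
    """Return (x-y spread, z spread) in cm from centroid samples in meters.

    Assumptions: spread = sample standard deviation; x-y = max of the x and y values.
    """
    xs, ys, zs = zip(*samples_m)
    sx, sy, sz = (statistics.stdev(axis) * 100.0 for axis in (xs, ys, zs))
    return max(sx, sy), sz
```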
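The motion-smoothness metrics need concrete definitions, which the source does not give. This sketch assumes overshoot is the largest travel past the target along the start-to-target direction, and steady-state error is the mean distance to the target over the last `settle_samples` samples (a hypothetical window). For mirrored trajectories, `target` would be the mirrored baton point and `trace` the recorded end-effector positions.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def tracking_metrics(
    trace: Sequence[Vec3], target: Vec3, settle_samples: int = 10
) -> Tuple[float, float]:
    """Return (overshoot_cm, steady_state_error_cm) for a move from trace[0] to target."""
    start = trace[0]
    d = [target[i] - start[i] for i in range(3)]
    length = math.sqrt(sum(c * c for c in d))
    if length == 0.0:
        raise ValueError("start and target coincide")
    u = [c / length for c in d]  # unit approach direction
    # Projection of (p - target) onto the approach direction; positive means past the target.
    past = [sum((p[i] - target[i]) * u[i] for i in range(3)) for p in trace]
    overshoot = max(0.0, max(past)) * 100.0
    tail = trace[-settle_samples:]
    steady_state = sum(math.dist(p, target) for p in tail) / len(tail) * 100.0
    return overshoot, steady_state
```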