MAGIC: Manipulation for Automated Geometric Inspection and Construction

Research Objective

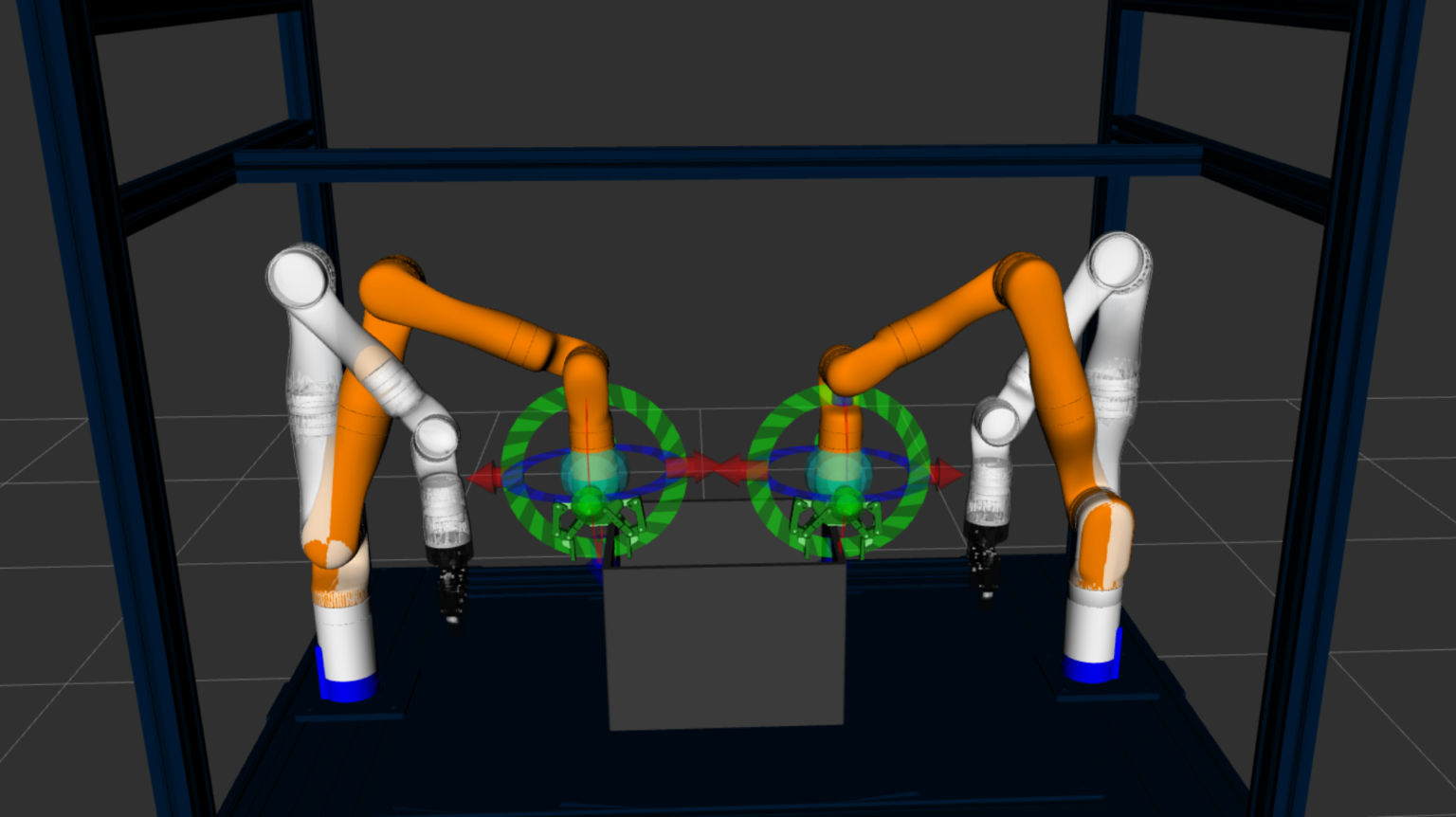

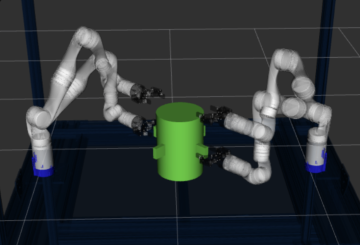

The MAGIC system (Manipulation for Automated Geometric Inspection and Construction) aims to create a scalable, dual-arm robotic platform for high-fidelity geometric inspection of additively manufactured components. The system uses coordinated manipulation and perception to address a core limitation of current inspection systems: incomplete surface coverage caused by occlusions or fixed sensor viewpoints.

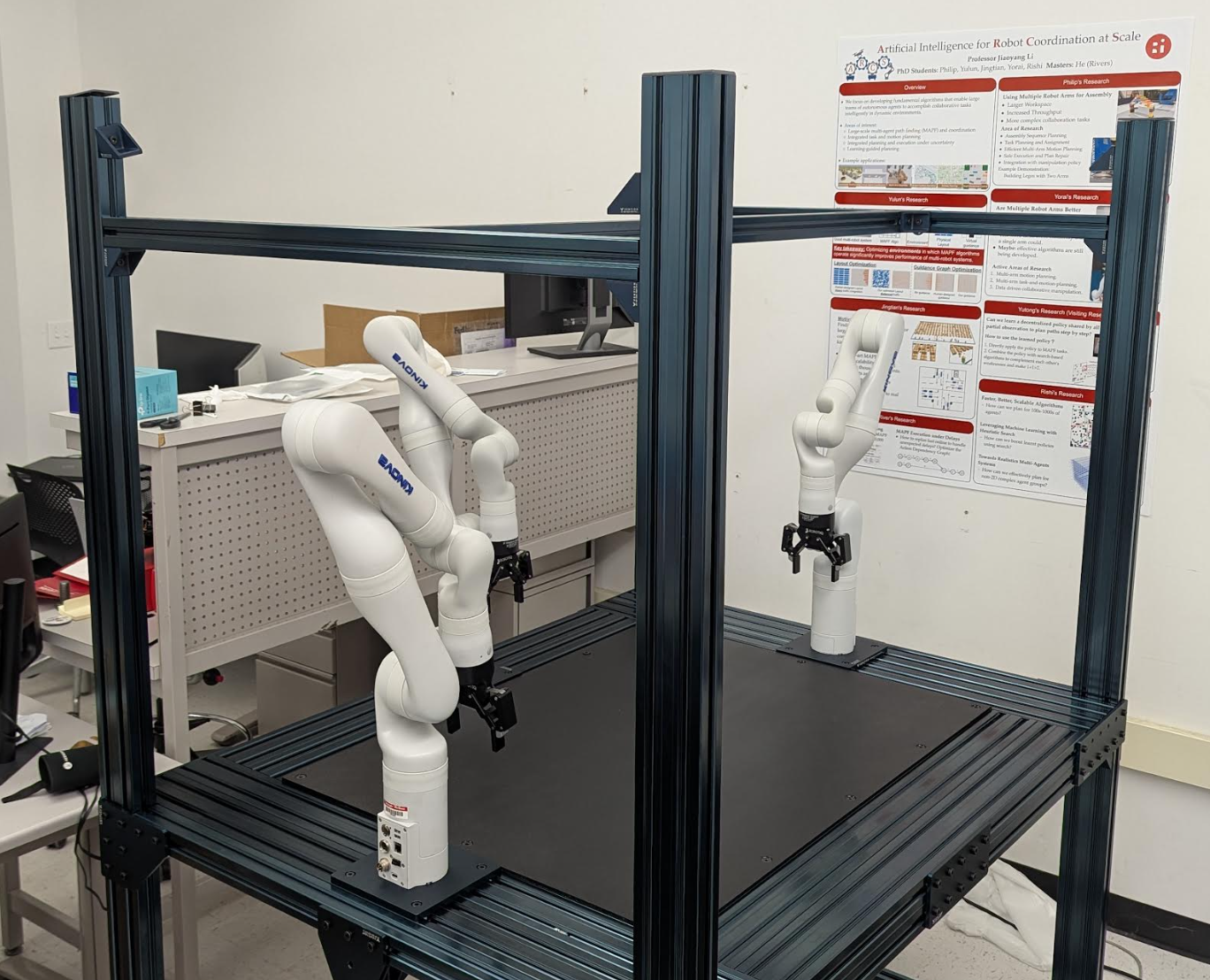

MAGIC uses two Kinova Gen3 arms to dynamically reorient objects in front of a fixed RGB-D sensor array, enabling complete 3D reconstruction without moving the cameras. It follows a rigorous systems engineering process (defining use cases, functional requirements, subsystem architectures, and validation metrics) to develop an integrated robotic system capable of adaptive inspection and defect detection for Wire Arc Additive Manufacturing (WAAM) parts.
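
The idea of covering the whole surface with fixed cameras can be illustrated with a minimal sketch: if the object pose is known at each view, every camera-frame point can be mapped back into a common object frame and the views merged. The function names (`rot_z`, `camera_to_object`, `fuse_views`) are hypothetical, the pose is assumed to come from the pose estimation pipeline, and rotation is restricted to the z-axis purely to keep the example short. It is not the project's actual implementation.

```python
import math

def rot_z(theta):
    """3x3 rotation matrix about the z-axis (illustrative; real poses are full 6-DoF)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def camera_to_object(point_cam, rotation, translation):
    """Invert p_cam = R * p_obj + t, giving p_obj = R^T * (p_cam - t)."""
    d = [point_cam[i] - translation[i] for i in range(3)]
    # Multiplying by R^T: output component j uses column j of R.
    return [sum(rotation[i][j] * d[i] for i in range(3)) for j in range(3)]

def fuse_views(views):
    """Merge views into one object-frame cloud.

    views: iterable of (points_cam, rotation, translation), one entry per
    object orientation presented to the fixed camera.
    """
    cloud = []
    for points_cam, rotation, translation in views:
        cloud.extend(camera_to_object(p, rotation, translation) for p in points_cam)
    return cloud

# The same surface point seen at two object orientations (assumed values).
t = [0.0, 0.0, 0.5]
views = [
    ([[0.1, 0.0, 0.5]], rot_z(0.0), t),
    ([[0.0, 0.1, 0.5]], rot_z(math.pi / 2), t),
]
merged = fuse_views(views)
```

Both observations land on the same object-frame point, which is what allows partial views from different orientations to accumulate into one model.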

Key Features Achieved

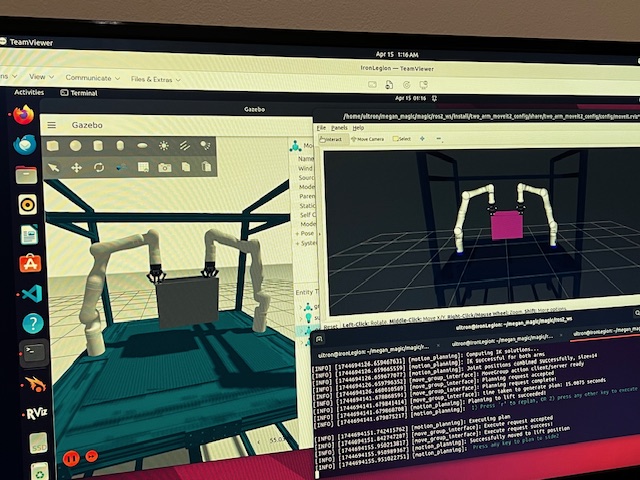

- Designed a modular ROS2 + MoveIt2 architecture supporting dual-arm, synchronized Cartesian and joint-space motion planning.

- Developed a pose estimation pipeline using fiducial markers and RGB-D input, achieving 6-DoF localization with sub-centimeter accuracy.

- Implemented a finite-state machine (FSM) for sequential motion control, enabling smooth transitions between grasp, rotation, and placement.

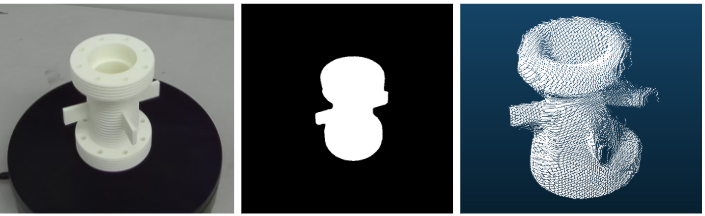

- Integrated a 3D reconstruction subsystem using SAM2.1 segmentation and calibrated depth fusion, achieving ~2.0 cm spatial precision.

- Achieved 4.4 ± 3.4 s dual-arm trajectory planning time and 4/5 manipulation success rate on hardware trials.

- Validated reconstruction completeness and precision through quantitative comparison with reference CAD models.

- Built a real-time visualization GUI for operator feedback, surface deviation mapping, and inspection report generation.
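
The sequential motion control mentioned above can be sketched as a small finite-state machine. This is a minimal illustration only: the state names, the `TRANSITIONS` table, and `run_sequence` are assumptions made for the example, and the callables stand in for whatever planning and execution calls the real system issues.

```python
from enum import Enum, auto

class State(Enum):
    IDLE = auto()
    GRASP = auto()
    ROTATE = auto()
    PLACE = auto()
    DONE = auto()
    FAILED = auto()

# Fixed, linear ordering of the manipulation steps (assumed for illustration).
TRANSITIONS = {
    State.IDLE: State.GRASP,
    State.GRASP: State.ROTATE,
    State.ROTATE: State.PLACE,
    State.PLACE: State.DONE,
}

def run_sequence(actions):
    """Step through the states, running each state's action on entry.

    actions: dict mapping State -> callable returning True on success.
    Returns the list of states visited; any failed action ends in FAILED.
    """
    state = State.IDLE
    history = [state]
    while state in TRANSITIONS:
        next_state = TRANSITIONS[state]
        action = actions.get(next_state)
        if action is not None and not action():
            state = State.FAILED
        else:
            state = next_state
        history.append(state)
    return history

# Stub actions; a real system would call its motion planner here.
history = run_sequence({
    State.GRASP: lambda: True,
    State.ROTATE: lambda: True,
    State.PLACE: lambda: True,
})
```

Keeping the transition table separate from the actions makes it straightforward to add recovery states later without touching the execution loop.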

Challenges & Learnings

- Integration complexity: Synchronizing perception, motion planning, and control required extensive debugging and iterative validation.

- Sim-to-real transfer: Achieved near-identical behavior between Gazebo and hardware, but workspace constraints limited manipulation range.

- Pose dependency: Reliance on ArUco markers reduced generalizability under occlusions and lighting variation.

- Reconstruction precision: Stereo depth-based methods were sensitive to low-texture surfaces; upgrades to time-of-flight (ToF) or structured-light sensing are planned.

- Risk mitigation: Managed collision risks through layered safety protocols, including manual E-stop and simulated motion verification.

- Key learning: Integration effort and risk tracking outweighed pure algorithmic complexity, reinforcing the importance of system discipline in robotics.

Quantitative Results

- Manipulation success rate: 90% across 10 real-hardware trials.

- Trajectory planning latency: 4.4 ± 3.4 seconds (measured over 20 runs).

- 3D reconstruction precision: 1.9–2.0 cm average deviation (vs. 3 cm requirement).

- Reconstruction runtime: 8 minutes end-to-end (below 10-minute threshold).

- Object surface damage rate: <20% across repeated trials.
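
Figures like those above are typically derived from per-trial logs. The sketch below shows one way to compute a mean ± standard deviation, a success rate, and a pass/fail check against a requirement. The helper names are hypothetical, the latency samples are placeholders rather than the project's measurements, and treating a requirement as "less than or equal to the limit" is an assumption.

```python
from statistics import mean, stdev

def summarize(samples):
    """Return (mean, sample standard deviation) of a list of measurements."""
    return mean(samples), stdev(samples)

def success_rate(outcomes):
    """Fraction of trials that succeeded; outcomes is a list of booleans."""
    return sum(outcomes) / len(outcomes)

def meets_requirement(value, limit):
    """True when a measured value is within its upper limit (assumed inclusive)."""
    return value <= limit

# Placeholder latency samples in seconds, not measured data.
latency_mean, latency_std = summarize([2.0, 4.0, 6.0])

# Nine successes out of ten trials corresponds to the reported 90%.
rate = success_rate([True] * 9 + [False])

# Reported values checked against the stated limits.
precision_ok = meets_requirement(2.0, 3.0)   # cm deviation vs. 3 cm requirement
runtime_ok = meets_requirement(8.0, 10.0)    # minutes vs. 10-minute threshold
```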