Pepper: Multi-Modal Personal Assistant Robot

Pepper integrates SLAM, speech recognition, and deep learning-based object manipulation into a single platform designed for home assistant scenarios that demand reliable navigation and intuitive human-robot interaction.

Technology Stack

- SLAM via ROS gmapping paired with Microsoft Kinect depth sensing.

- Deep learning perception with CNN classifiers for 1000 object categories.

- Voice interaction using MFCC-based speaker identification and speech recognition pipelines.

- Custom three-layer chassis designed in AutoCAD and fabricated with acrylic for modularity.
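The MFCC front end and a simple text-independent speaker match can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not Pepper's actual pipeline: the frame sizes, the 26-filter mel bank, the mean/std pooling and the cosine nearest-template rule are all assumed, and the function names (`mfcc`, `embed`, `identify`) are hypothetical.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters evenly spaced on the mel scale."""
    mels = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mels) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):
            fbank[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):
            fbank[i - 1, k] = (right - k) / max(right - center, 1)
    return fbank


def mfcc(signal, sample_rate=16000, frame_ms=25, hop_ms=10,
         n_fft=512, n_filters=26, n_coeffs=13):
    """Return an (n_frames, n_coeffs) array of MFCCs (assumed parameters)."""
    signal = np.asarray(signal, dtype=float)
    signal = np.append(signal[0], signal[1:] - 0.97 * signal[:-1])  # pre-emphasis
    frame_len = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    if len(signal) < frame_len:
        signal = np.pad(signal, (0, frame_len - len(signal)))
    n_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    power = np.abs(np.fft.rfft(frames, n_fft)) ** 2 / n_fft  # power spectrum per frame
    log_mel = np.log(power @ mel_filterbank(n_filters, n_fft, sample_rate).T + 1e-10)
    # Orthonormal DCT-II of the log mel energies, keeping the first n_coeffs terms.
    k = np.arange(n_coeffs)[:, None]
    m = np.arange(n_filters)[None, :]
    basis = np.cos(np.pi * k * (2 * m + 1) / (2 * n_filters))
    scale = np.full(n_coeffs, np.sqrt(2.0 / n_filters))
    scale[0] = np.sqrt(1.0 / n_filters)
    return (log_mel @ basis.T) * scale


def embed(signal, sample_rate=16000):
    """Text-independent utterance embedding: mean and std of MFCCs, c0 dropped."""
    feats = mfcc(signal, sample_rate)[:, 1:]  # c0 mostly tracks overall loudness
    return np.concatenate([feats.mean(axis=0), feats.std(axis=0)])


def identify(signal, enrolled):
    """Name of the enrolled speaker whose template is most cosine-similar."""
    e = embed(signal)

    def cosine(a, b):
        return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

    return max(enrolled, key=lambda name: cosine(e, enrolled[name]))
```

Pooling frame statistics makes the embedding independent of what was said, which is the property a text-independent classifier needs; a production system would more likely use a trained model (for example GMMs or a neural speaker encoder) on top of the same MFCC features.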

Key Features

- Autonomous indoor navigation that fuses encoder and IMU data to reduce odometry drift during localization.

- Personalized speech interface capable of identifying speakers and responding in real time.

- Object recognition and depth-guided grasping integrated with a 3-DoF manipulator.

- Modular design enabling rapid upgrades to sensors, actuators, and compute payloads.
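One way to fuse encoder and IMU data, as the navigation feature describes, is a scalar Kalman filter on heading. The sketch below assumes that encoder odometry drives the prediction step and that IMU yaw is the measurement; the class name `HeadingKF` and its noise values are hypothetical, and a full system would typically estimate x, y and heading jointly (for example with an extended Kalman filter).

```python
import math
from dataclasses import dataclass


@dataclass
class HeadingKF:
    """Scalar Kalman filter on robot heading (illustrative sketch)."""

    theta: float = 0.0  # heading estimate, rad
    p: float = 1.0      # variance of the heading estimate, rad^2
    q: float = 0.01     # process noise added per prediction (assumed), rad^2
    r: float = 0.05     # IMU yaw measurement noise (assumed), rad^2

    def predict(self, d_left: float, d_right: float, wheel_base: float) -> None:
        # Differential-drive heading change from the distance each wheel travelled.
        self.theta += (d_right - d_left) / wheel_base
        # Wheel slip makes the odometry prediction less certain.
        self.p += self.q

    def update(self, imu_yaw: float) -> float:
        # Wrap the innovation into [-pi, pi) so headings near +/-pi compare correctly.
        innovation = (imu_yaw - self.theta + math.pi) % (2 * math.pi) - math.pi
        k = self.p / (self.p + self.r)  # Kalman gain
        self.theta += k * innovation
        self.p *= 1.0 - k
        return self.theta


kf = HeadingKF()
kf.predict(d_left=0.10, d_right=0.12, wheel_base=0.30)
print(kf.update(imu_yaw=0.05))
```

The gain `k` weights the IMU reading by how uncertain the odometry has become, so accumulated slip is pulled back toward the IMU estimate; the filter reduces drift rather than eliminating it, since the IMU yaw itself can drift.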

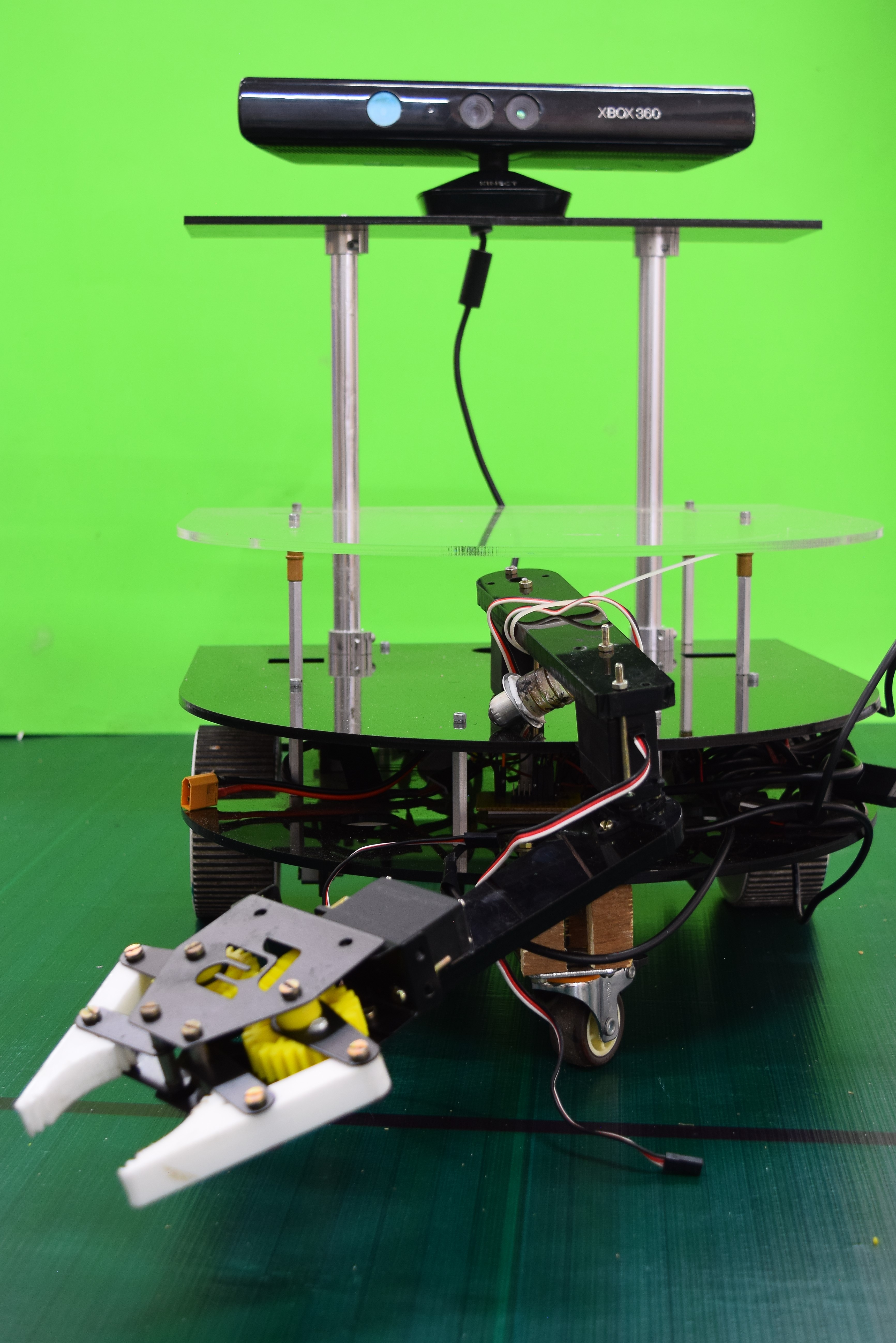

Robot Design

The three-tier chassis houses power electronics, motor drivers, a 3-DoF manipulator, and the onboard compute stack. Pepper employs high-torque drive motors with a caster wheel for stability, while a top-mounted Kinect sensor delivers RGB-D data for mapping and manipulation tasks.
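gmapping builds maps from 2D laser scans, so Kinect depth is commonly converted into a planar scan first (the ROS `depthimage_to_laserscan` package exists for this). The write-up does not say how Pepper did it; the sketch below assumes a pinhole camera model and converts one depth-image row into (angle, range) pairs. The intrinsics `fx`, `cx` and the range limits are hypothetical parameters.

```python
import math


def depth_row_to_scan(depth_row_m, fx, cx, range_min=0.45, range_max=4.0):
    """Convert one depth-image row (metres) into (angle, range) pairs.

    Pairs are returned in image-column order, i.e. decreasing angle.
    """
    scan = []
    for u, z in enumerate(depth_row_m):
        if not (z > 0.0 and math.isfinite(z)):
            continue  # zero or NaN pixels carry no depth return
        x = (u - cx) * z / fx  # lateral offset, positive toward the image right
        r = math.hypot(x, z)   # range in the horizontal plane
        if range_min <= r <= range_max:
            # ROS convention: positive angles are to the robot's left.
            scan.append((math.atan2(-x, z), r))
    return scan
```

Using the full Euclidean range rather than raw depth `z` matters at the image edges, where the two differ most.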

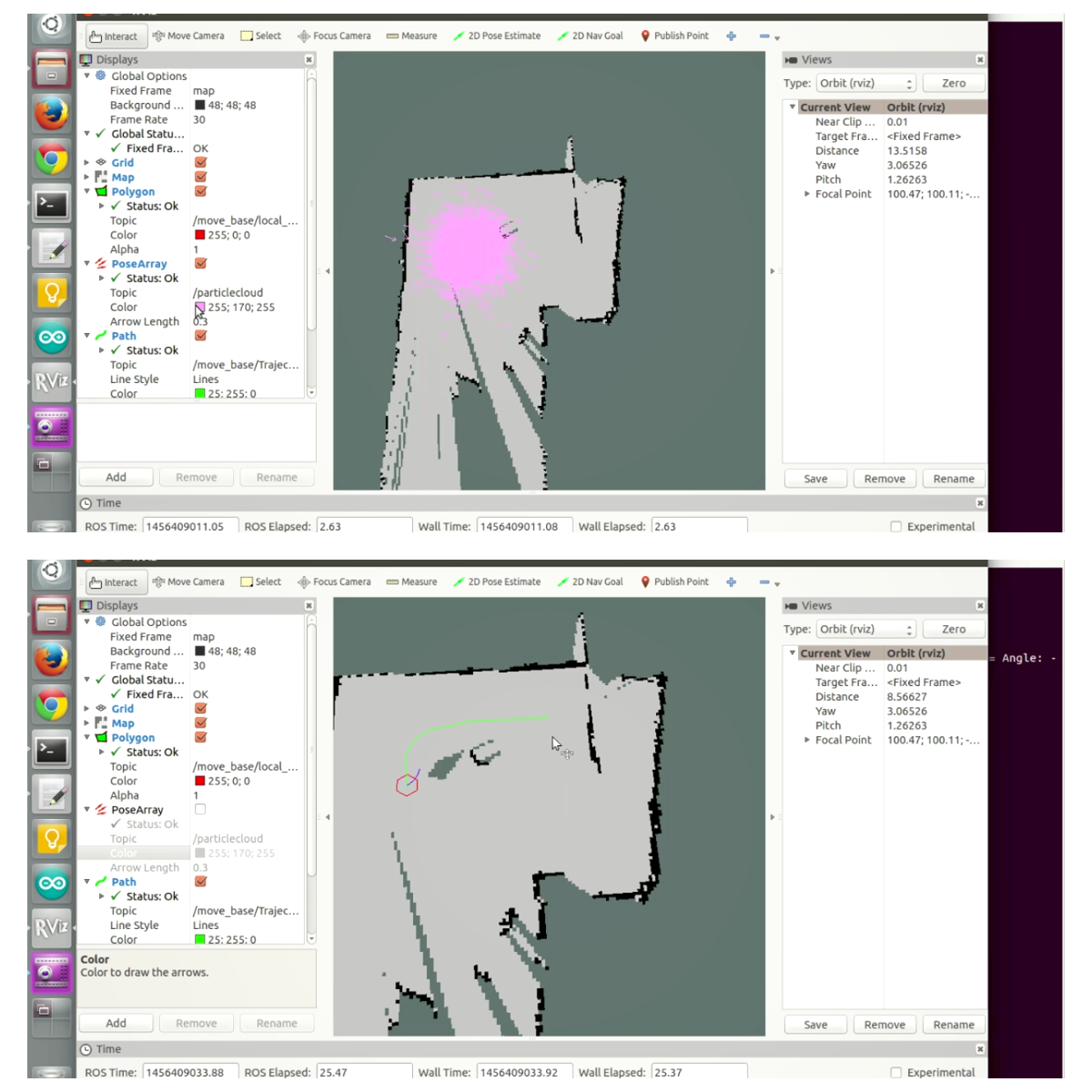

Autonomous Navigation

- Builds indoor maps using Kinect depth data and the ROS gmapping package.

- Applies Kalman filtering to fuse encoder and IMU signals, mitigating wheel slip and drift.

- Executes path planning and navigation across multi-room environments with obstacle avoidance.
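The write-up does not name Pepper's planner. A grid A* search over the occupancy map that gmapping produces is one common choice for global path planning, sketched below under the assumptions of a 4-connected grid, unit step cost and a Manhattan heuristic; `astar` is a hypothetical helper.

```python
import heapq


def astar(grid, start, goal):
    """A* over a 4-connected occupancy grid; grid[r][c] == 1 marks an obstacle."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper path
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal unreachable
```

In practice the occupancy grid would first be inflated by the robot's radius so that the planned path keeps clear of walls, with a local planner handling obstacles that appear while moving.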

Human Interaction

- Identifies users by voice, using MFCC-based embeddings and a text-independent speaker classifier.

- Perceives objects through CNN-based recognition and depth-aware grasp targeting.

- Manipulates objects using a 3-DoF robotic arm and a single-DoF gripper, positioned using depth cues.
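Depth-guided grasping combines two steps: back-projecting the detected object's pixel and depth into a 3D point, then solving inverse kinematics for the arm. The sketch assumes a pinhole camera and a 3-DoF arm made of a base yaw joint followed by a two-link planar shoulder/elbow chain; the link lengths, function names and arm layout are hypothetical, and the camera-to-arm extrinsic transform (not shown) would have to come from the robot's calibration.

```python
import math


def back_project(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with depth (metres) to camera-frame XYZ."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


def ik_3dof(x, y, z, l1, l2):
    """Joint angles (yaw, shoulder, elbow) for a yaw joint plus a two-link planar arm.

    The target (x, y, z) is in the arm base frame with z up. Returns None if unreachable.
    """
    yaw = math.atan2(y, x)  # rotate the arm's plane toward the target
    reach = math.hypot(x, y)
    cos_elbow = (reach**2 + z**2 - l1**2 - l2**2) / (2 * l1 * l2)  # law of cosines
    if not -1.0 <= cos_elbow <= 1.0:
        return None  # target lies outside the arm's workspace
    elbow = math.acos(cos_elbow)  # picks one of the two IK branches
    shoulder = math.atan2(z, reach) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return yaw, shoulder, elbow
```

A real pipeline would usually also check joint limits and choose between the two elbow branches based on obstacles and gripper approach angle.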

Outcome

The project demonstrates a cohesive assistant robot capable of mapping its environment, understanding verbal commands, and manipulating everyday objects, showing that accessible hardware such as a Kinect sensor and an acrylic chassis can support capable personal robotics.