SSC: Sign-to-Speech Communication Interface

Microcontrollers

Sensor Fusion

Deep Learning

LSTM

Wireless Communication

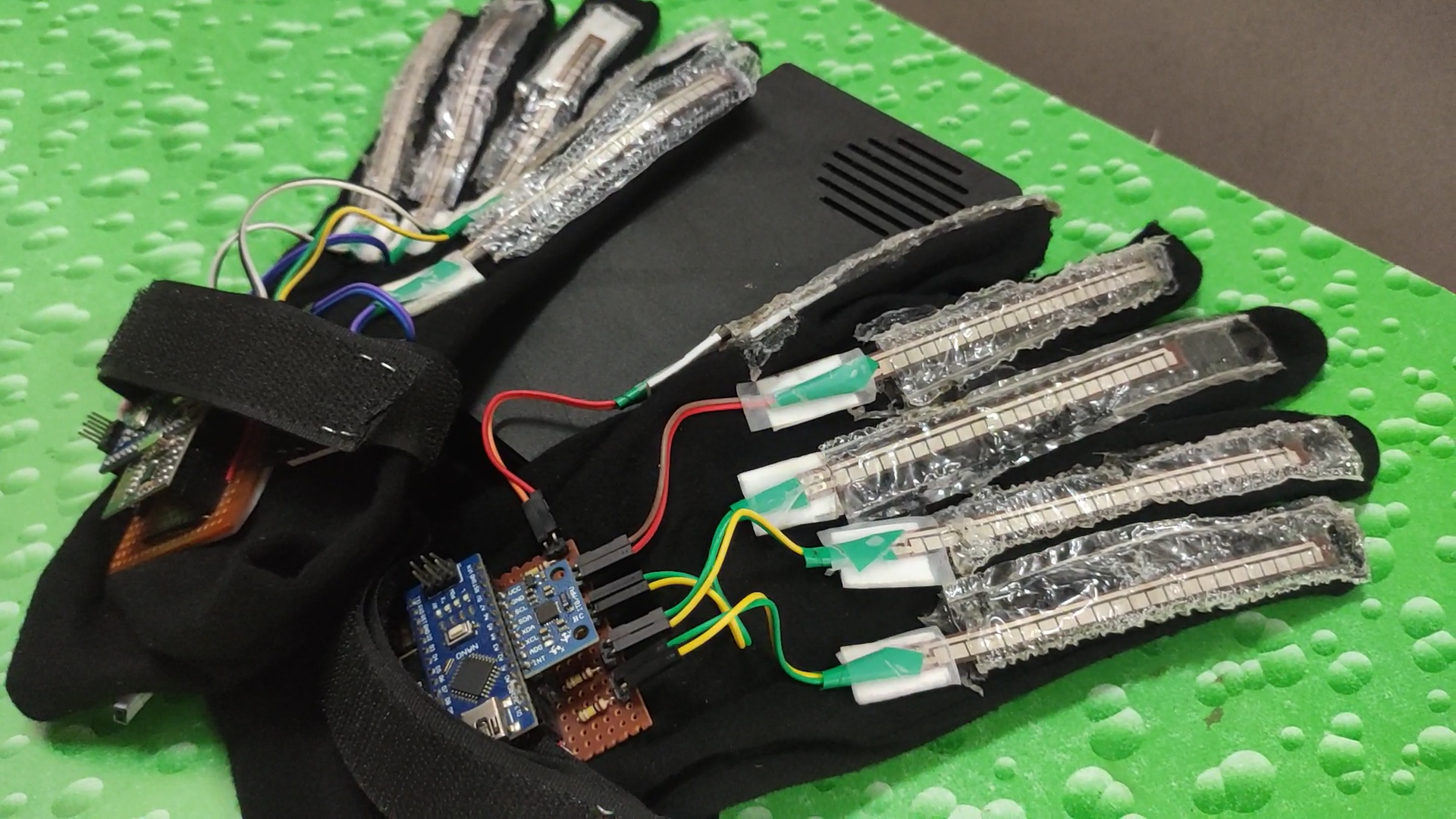

SSC delivers a mobile, battery-powered system that translates calibrated hand gestures into synthesized speech, bridging communication gaps for individuals with speech disabilities.

Objective

Mentored the development of a sign-language-to-speech converter that produces fast, personalized voice output without keyboards or touch interfaces, giving users with limited speech ability greater autonomy.

Technology Stack

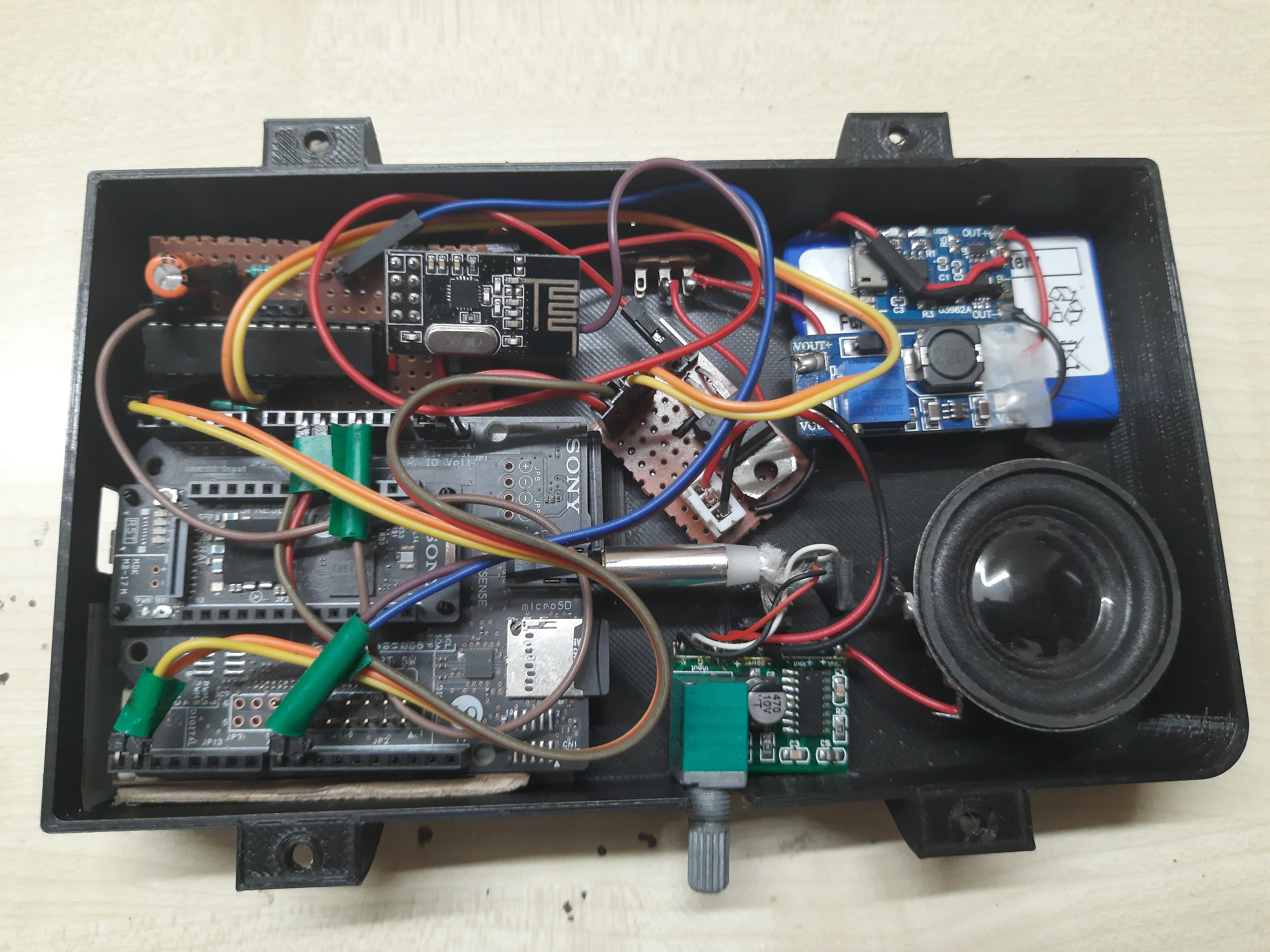

- Sony Spresense microcontroller board with nRF24L01+ wireless modules for low-latency communication.

- Sensor fusion combining flex sensors and IMUs to capture finger articulation and hand pose.

- LSTM-based deep learning models to decode gesture sequences into words.

- Onboard speaker with configurable voice templates for natural playback.
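As an illustration of the sensor-fusion step, the sketch below blends gyroscope and accelerometer readings into a single hand-pitch estimate and appends it to the finger bend angles to form one input frame for a sequence model. The complementary filter is a common fusion technique chosen here for illustration; the source does not state which method SSC uses. The function names, the axis convention, the five-finger layout, and the `alpha` weight are all assumptions.

```python
import math


def accel_pitch_deg(ax, ay, az):
    """Pitch implied by gravity alone: noisy, but free of drift.

    Assumes x points along the hand and z points out of the back of the hand.
    """
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def fuse_pitch(prev_pitch_deg, gyro_rate_dps, accel, dt, alpha=0.98):
    """Complementary filter (illustrative choice, not confirmed by the source).

    Trusts the integrated gyro rate over short intervals and lets the
    accelerometer slowly correct the accumulated drift.
    """
    gyro_estimate = prev_pitch_deg + gyro_rate_dps * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch_deg(*accel)


def feature_frame(flex_angles_deg, pitch_deg):
    """One time step of model input: finger bend angles plus hand pitch."""
    return list(flex_angles_deg) + [pitch_deg]


# Hypothetical readings: hand level, no rotation, previous estimate 10 degrees.
pitch = fuse_pitch(10.0, 0.0, (0.0, 0.0, 1.0), dt=0.01)
frame = feature_frame([5.0, 40.0, 42.0, 38.0, 10.0], pitch)
```

A sequence of such frames, collected at a fixed sample rate, is the kind of input an LSTM would consume to decode a gesture into a word.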

Key Features

- Personalized calibration maps the device to an individual's hand geometry for accuracy.

- Continuous gesture tracking predicts the angular positions of the fingers and hand to disambiguate similar signs.

- Custom vocabulary stores high-frequency phrases to reduce latency during conversations.

- Lightweight, battery-powered design supports unrestricted, mobile daily use.
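One way personalized calibration could work is sketched below: the wearer holds a flat hand and then a fist, and each flex sensor's raw reading is rescaled to a bend angle using that person's own range. This is a minimal sketch under stated assumptions, not SSC's documented procedure; the two-pose routine, the function names, the raw ADC values, and the 90-degree maximum are all hypothetical.

```python
def calibrate(flat_readings, fist_readings):
    """Pair each finger's raw reading with the hand flat and in a fist."""
    return list(zip(flat_readings, fist_readings))


def to_bend_angles(raw, calibration, max_angle_deg=90.0):
    """Map raw readings onto 0..max_angle_deg using the wearer's own range."""
    angles = []
    for value, (flat, fist) in zip(raw, calibration):
        span = fist - flat
        if span == 0:
            # Sensor showed no range during calibration; treat as unbent.
            angles.append(0.0)
            continue
        fraction = (value - flat) / span
        # Clamp so readings outside the calibrated range stay in bounds.
        fraction = min(1.0, max(0.0, fraction))
        angles.append(fraction * max_angle_deg)
    return angles


# Hypothetical ADC values for two fingers.
user_calibration = calibrate([200, 210], [600, 710])
angles = to_bend_angles([400, 210], user_calibration)
```

Because the mapping is stored per finger and per user, the same raw reading can correspond to different bend angles on different hands, which is what makes the downstream gesture model less sensitive to hand geometry.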

Impact

SSC showcases how embedded sensor fusion and deep learning can provide inclusive communication pathways, empowering users to converse fluidly in diverse environments.