VEX V5 Autonomy Tools

Tags: VEX V5, Robotics, Autonomy, Path Planning, Control, Education

Objective

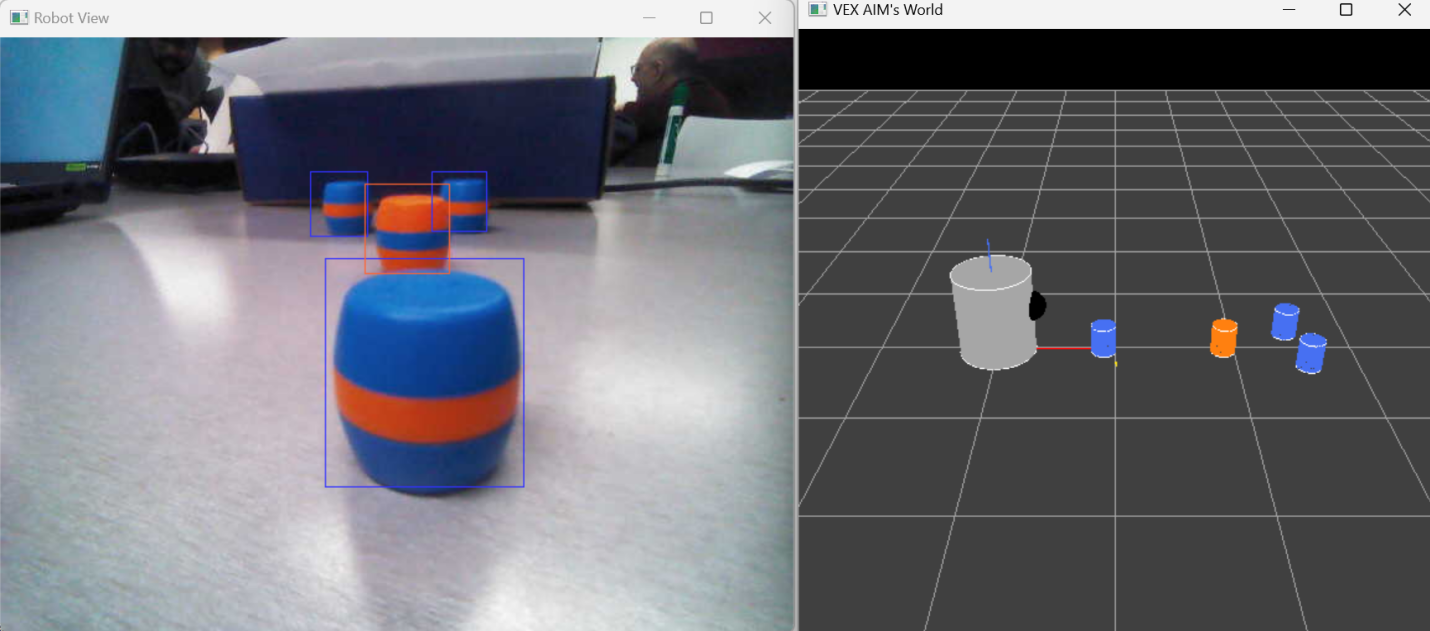

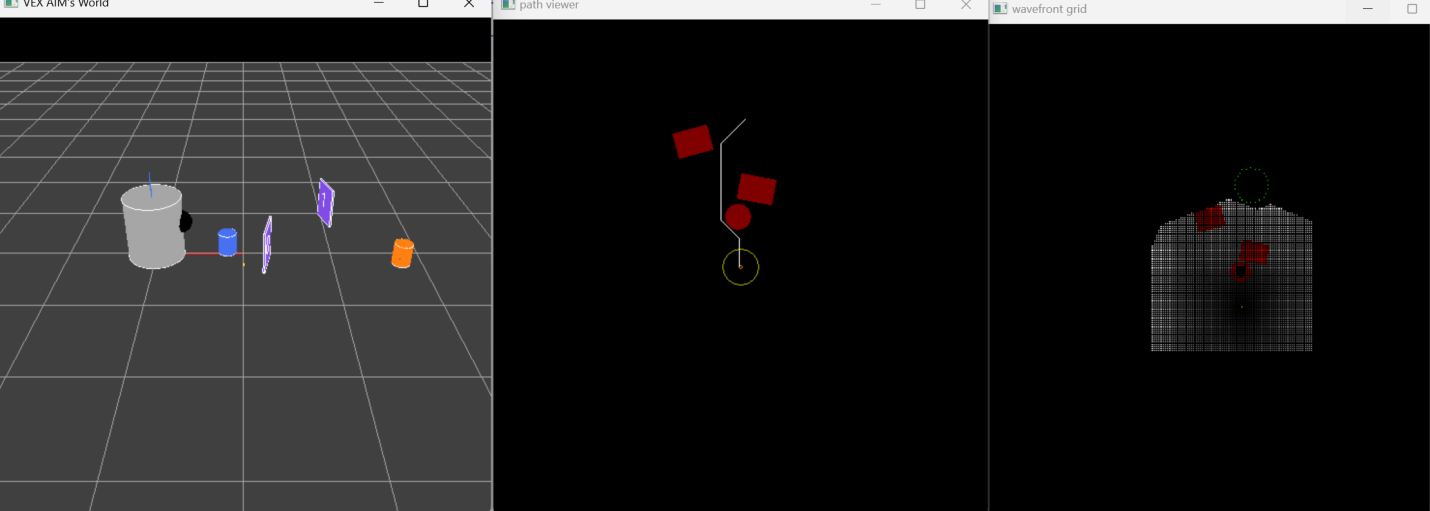

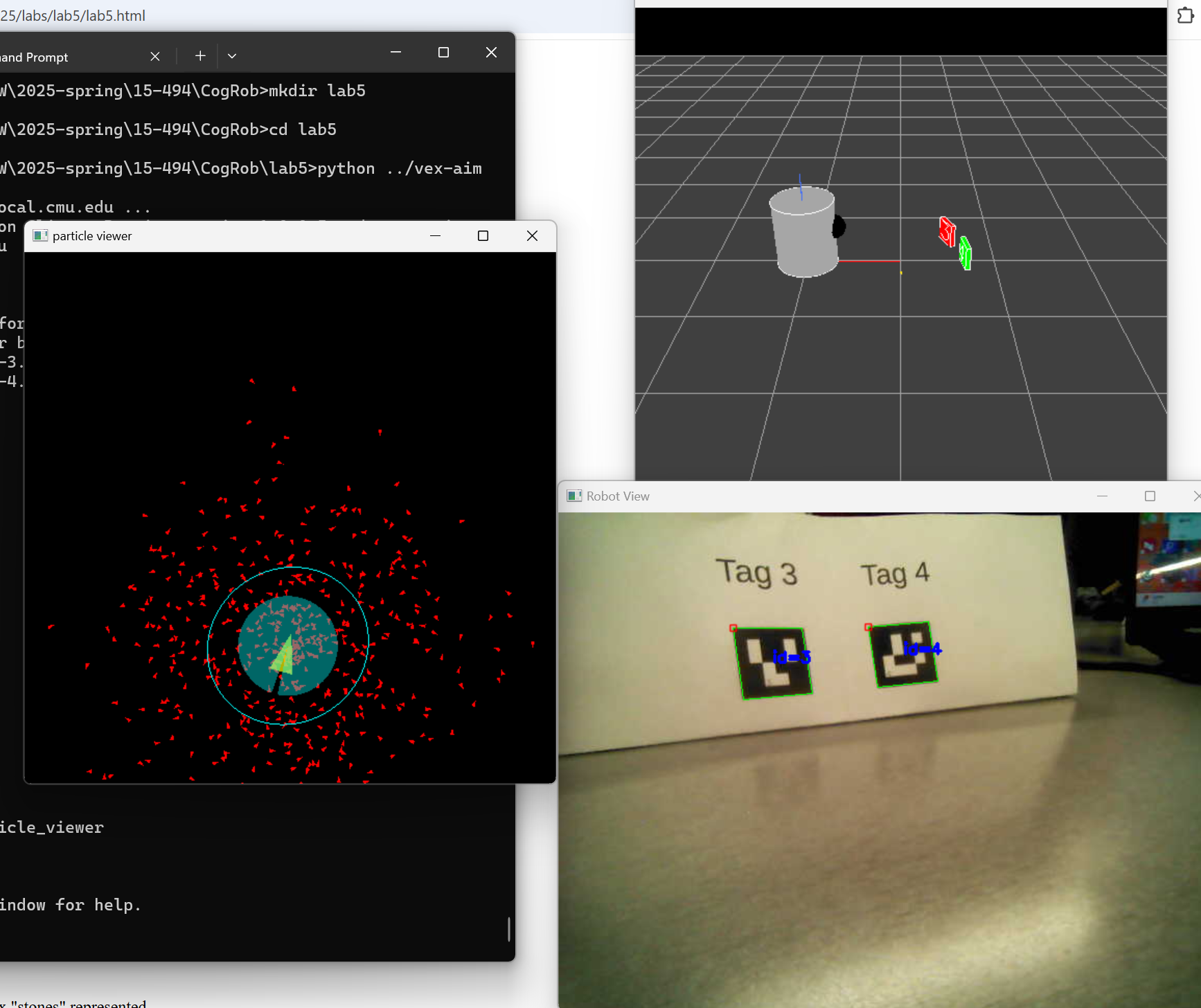

Build an end-to-end autonomy stack from scratch for the new VEX V5 robot used in 15-494/694, covering perception, state estimation, mapping, planning, control, and human-robot interaction. The stack enables classroom-ready labs and demos spanning SLAM with particle filters, grid-based and sampling-based planners, and a conversational interface that connects speech, symbolic reasoning, and scene understanding.

Key Features Achieved

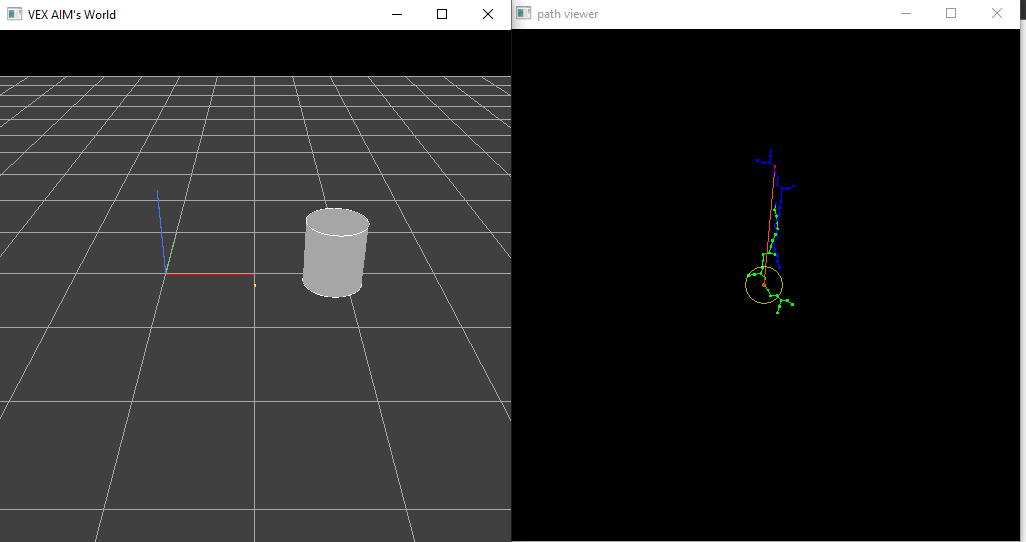

- Implemented particle-filter-based SLAM tailored to low-cost VEX V5 sensing and compute constraints.

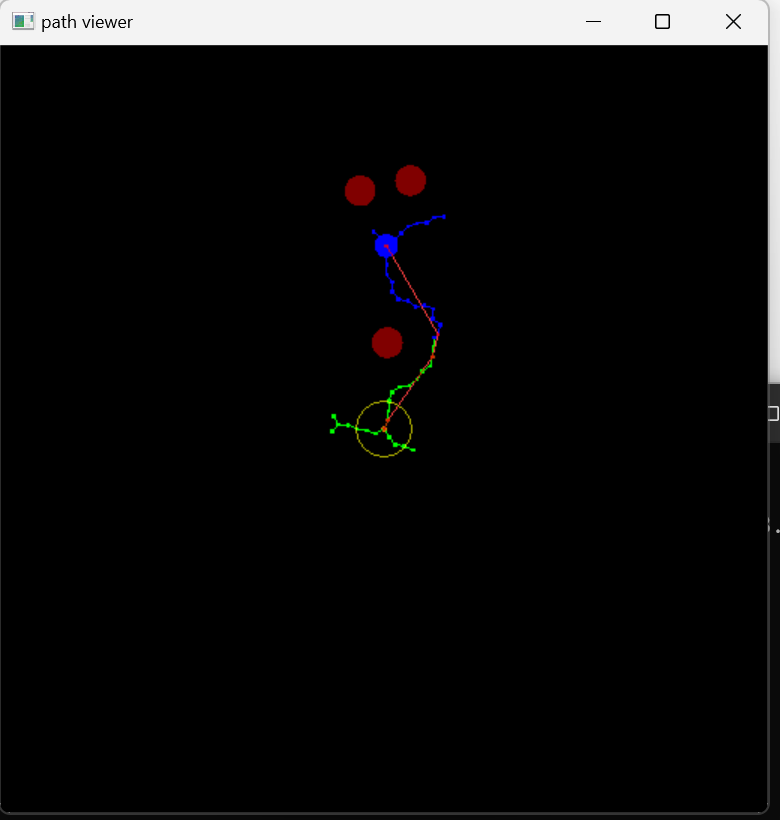

- Built global planners: Wavefront (grid-based) and RRT (sampling-based) with obstacle inflation and goal biasing.

- Integrated local motion primitives and controllers for reliable waypoint tracking and collision avoidance.

- Added conversational HRI: Google Speech-to-Text + ChatGPT API for symbolic planning and scene-aware commands.

- Packaged stack as modular labs/demos for course use with clear APIs and reference solutions.
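As an illustration of the grid-based planner with obstacle inflation listed above, here is a minimal Wavefront sketch. It is not the course's reference solution: the grid encoding (0 = free, 1 = obstacle), the 4-connected neighborhood, the square inflation footprint, and the names `inflate` and `wavefront` are all assumptions made for this example.

```python
from collections import deque

# 4-connected neighborhood (an assumption; 8-connected grids are also common).
NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def inflate(grid, radius):
    """Return a copy of grid with each obstacle grown by `radius` cells
    in every direction (square footprint), approximating the robot's size."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                for dr in range(-radius, radius + 1):
                    for dc in range(-radius, radius + 1):
                        rr, cc = r + dr, c + dc
                        if 0 <= rr < rows and 0 <= cc < cols:
                            out[rr][cc] = 1
    return out


def wavefront(grid, start, goal):
    """Propagate a breadth-first wave outward from the goal, then walk
    downhill from the start. Returns a list of (row, col) cells, or None."""
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] == 1 or grid[goal[0]][goal[1]] == 1:
        return None

    # Phase 1: label every reachable free cell with its step count to the goal.
    dist = {goal: 0}
    queue = deque([goal])
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBORS:
            n = (r + dr, c + dc)
            if (0 <= n[0] < rows and 0 <= n[1] < cols
                    and grid[n[0]][n[1]] == 0 and n not in dist):
                dist[n] = dist[(r, c)] + 1
                queue.append(n)

    if start not in dist:
        return None  # the wave never reached the start: no path exists

    # Phase 2: from the start, repeatedly step to the labeled neighbor
    # with the smallest distance until the goal is reached.
    path = [start]
    cur = start
    while cur != goal:
        r, c = cur
        labeled = [(r + dr, c + dc) for dr, dc in NEIGHBORS
                   if (r + dr, c + dc) in dist]
        cur = min(labeled, key=dist.get)
        path.append(cur)
    return path


if __name__ == "__main__":
    world = [[0] * 5 for _ in range(5)]
    world[2][2] = 1                      # single obstacle in the middle
    safe = inflate(world, radius=1)      # grows to a 3x3 block
    print(wavefront(safe, (0, 0), (4, 4)))
```

Running the wave from the goal rather than the start means the same distance field can be reused for any start cell, which helps on a platform where recomputing the map is expensive.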

Challenges & Learnings

- Resource limitations on VEX V5 required careful algorithmic tuning, map resolution tradeoffs, and efficient data structures.

- Speech and LLM integration needed robust grounding: intent parsing, symbol mapping, and safety checks before actuation.

- SLAM robustness improved via sensor calibration, resampling strategies, and motion-model refinement.

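- Planner design traded completeness against speed: Wavefront is complete at the grid's resolution but its cost grows with the number of map cells, while RRT is only probabilistically complete. Hybridizing Wavefront + RRT improved success rates.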

- Curriculum integration emphasized reproducibility, documentation, and student-facing diagnostics.
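The "resampling strategies" mentioned above are not specified in this write-up. As one common option, the sketch below shows systematic (low-variance) resampling, together with an effective-sample-size check that is often used to decide when to resample. The function names and the plain-list particle representation are assumptions made for illustration, not the project's actual API.

```python
import random


def effective_sample_size(weights):
    """ESS = (sum w)^2 / sum(w^2). Equals N for uniform weights and
    approaches 1 when a single particle carries all the weight."""
    total = sum(weights)
    return total * total / sum(w * w for w in weights)


def systematic_resample(particles, weights, rng=random):
    """Draw len(particles) samples using one random offset and evenly
    spaced pointers over the cumulative weights (low-variance resampling).
    `rng` must provide a random() method returning a float in [0, 1)."""
    n = len(particles)
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")

    step = total / n
    # Offset in (0, step], so zero-weight particles are never selected.
    u = (1.0 - rng.random()) * step

    resampled = []
    i = 0
    cumulative = weights[0]
    for k in range(n):
        pointer = u + k * step
        # Advance to the particle whose cumulative weight covers the pointer.
        while pointer > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
    return resampled


if __name__ == "__main__":
    poses = ["a", "b", "c", "d"]
    w = [0.1, 0.2, 0.6, 0.1]
    # Resample only when the particle set has degenerated (threshold is an
    # assumption; N/2 is a frequently used rule of thumb).
    if effective_sample_size(w) < len(poses) / 2:
        poses = systematic_resample(poses, w, random.Random(0))
    print(poses)
```

Compared with drawing each particle independently, this scheme uses a single random number per resampling step and keeps every particle whose normalized weight is at least 1/N, which reduces the sampling noise added to the filter.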